Copyright © W. J. Heitler (2019)

Networks

Neurons interact with one another through synapses, as described previously. However, the point of such interactions is usually to produce circuits, or networks of neurons, that in combination can produce an output which is behaviourally relevant to the animal. It is a classic case of "the whole being greater than the sum of its parts" - individual neurons have a limited repertoire of output, but a network of neurons may have emergent properties that go beyond what the individual neurons within the network can produce.

This chapter considers the following topics involving network simulations using Neurosim.

- Rhythm generating networks.

- Stochastic resonance.

- Lateral inhibition.

- Pre-synaptic inhibition (including primary afferent depolarization)

- The Jeffress mechanism for auditory localization.

- Networks mediating associative learning.

- Wilson-Cowan firing rate models

Central Pattern Generators

Many biological activities involve rhythmic oscillations. For instance, locomotion usually involves 2 phases of activity known as the stance and swing phase, or the power and return stroke. For legged locomotion the stance/power phase is when the leg is on the ground and pushing back, the swing/return stroke is when the leg is lifted and returning forward to its starting position. Similar oscillations are found in many other locomotor activities, including flight and swimming, and also non-locomotor rhythmic activities such as breathing.

Most locomotor rhythms that have been investigated have been found to continue even after the removal of all sensory input. This means that the basic circuitry for generating the rhythm must reside entirely within the central nervous system. These circuits are called central pattern generators (CPGs). Some CPGs have single-cell endogenous bursters at their core (although often there is a pool of such neurons coupled by electrical synapses). However, most CPGs rely on networks of neurons to generate the rhythm.

As far back as 1914, a Scottish physiologist (and mountaineer) called Thomas Graham Brown proposed what he called the “half centre” model. The stance and swing phases were supposed to each be generated by half of a locomotor centre, and the two halves inhibited each other (reciprocal inhibition), thus resulting in a rhythm when the locomotor centre as a whole was excited by a continuous, non-rhythmic excitatory signal descending from some command centre in the brain.

Flip-Flop Circuit

In its essential details, reciprocal inhibition has been found to be at the heart of most rhythmic systems that have so far been investigated. However, there is a crucial element missing from the model in its simplest form – the effective inhibition mediated by each side must somehow weaken with time, or the system locks up permanently in one phase or the other.

Note the circuit contains two neurons which are reciprocally connected by inhibitory synapses. But also note that the drug picrotoxin has been applied, so the synapses are blocked. Both neurons are spiking continuously due to tonic excitatory input from “the brain” (actually, just tonic current input). A small amount of random noise has been added to each neuron so that the spikes are not exactly synchronous.

Two separate brief external excitatory stimuli (the square boxes) are applied to N1 and then N2 in sequence, but at the moment all these do is temporarily increase the spike rate of the receiving neuron. Their purpose will become apparent shortly.

This shows what the neurons do without the reciprocal inhibition. What happens when we enable it?

- Uncheck the picrotoxin drug box to remove the synaptic block.

- Click Start. You don’t have to click Clear because Results: Auto clear has been selected.

There may be a few spikes which are exactly synchronous with each other, but fairly soon, one side or the other “wins”, and inhibits the other. It is random which side wins – it depends on which spikes first. The inhibition continues until the inhibited neuron receives the brief external stimulus. Then it wins, and inhibits the other.

[Note: Without the noise, the synchronous spikes would continue with each side inhibiting the other at exactly the same time, and therefore with equal effect, until the external stimulus unbalanced the activity. This is really a computer artefact, since there is always some noise in real neurons.]

- Click Start several times.

Sometimes N1 wins initially, sometimes N2. But the losing neuron is flipped into winning when it receives help from the external stimulus. If the excitatory stimulus arrives in a neuron that is already winning, the extra spikes just increase the inhibition onto the losing neuron. Note that you could also flip the state by briefly inhibiting the winning neuron, rather than exciting the losing neuron. You can try this out by adjusting the stimulus parameters if you wish.

The circuit thus acts as an electronic flip-flop (a genuine name for an electronic circuit component) which can latch into either state, but be flipped to the other by a brief extra input. Such circuits may be useful in the nervous system when there are two exclusive behaviour options, but the animal needs to be able to switch between them, perhaps in response to a sudden sensory input. An example might be a switch from forward to backward crawling, if the crawler met an obstacle. (This is just hypothetical – I’m not aware of any evidence for such a circuit.)
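The latching behaviour can be captured in a few lines of Python. This is only a logical sketch, not the Neurosim model: the function name `flip_flop` and the encoding of the stimuli as a list of target neurons are assumptions made for illustration, and spikes, noise and synaptic dynamics are ignored entirely.

```python
def flip_flop(initial_winner, stimuli):
    """Return the winning neuron after each brief excitatory stimulus.

    initial_winner: 1 or 2, whichever neuron happened to spike first.
    stimuli: sequence of target neurons (1 or 2), one per stimulus.
    """
    winner = initial_winner
    states = []
    for target in stimuli:
        # Exciting the loser lets it take over; exciting the current
        # winner only reinforces the state that already exists.
        if target != winner:
            winner = target
        states.append(winner)
    return states


# Stimuli are applied to N1 and then N2, as in the simulation.
print(flip_flop(1, [1, 2]))  # case where N1 won the initial race
print(flip_flop(2, [1, 2]))  # case where N2 won the initial race
```

Whichever neuron wins the initial race, the sketch ends with N2 winning, because the final stimulus is always delivered to N2.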

Reciprocal Inhibition Oscillator

- Load and Start the parameter file Reciprocal Inhibitory Oscillator (v5-2). (You will probably have to set a Slow down factor to see things clearly.)

The system now oscillates! The circuit is exactly the same, except that the inhibitory synapses have been altered so that the inhibition decrements (anti-facilitates) with time. The inhibition from the winner thus gets weaker and weaker until the loser can escape from the inhibition and become a winner. Then its inhibition weakens in turn, while the previously weakened inhibition recovers during the time when its pre-synaptic neuron is silent.

[Note: The synapse properties are set using the Synapses: Spiking chemical menu command to access the Spiking Chemical Synapses Types dialog. You can then select type b: hyperpolarizing inhibitory from the list, and note that the Relative facilitation (near the bottom-right of the dialog) has a value of 0.9. Values less than 1 mean that a synapse of that type anti-facilitates (decrements).]

[Remember: Facilitation and anti-facilitation are frequency-dependent pre-synaptic phenomena in which, when the synapse is activated repeatedly, the amount of transmitter released per spike is modulated; increased during facilitation, decreased during anti-facilitation. The synapse returns to its baseline release rate after a period of inactivity.]
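To get a feel for what a Relative facilitation of 0.9 could mean, the sketch below assumes that each pre-synaptic spike simply scales the next response by that factor, with no recovery between spikes. That rule is an illustrative assumption rather than a description of how Neurosim implements facilitation internally, and the function name is hypothetical.

```python
def response_amplitudes(relative_facilitation, n_spikes, baseline=1.0):
    """Relative size of successive synaptic responses in a spike train."""
    amplitudes = []
    amplitude = baseline
    for _ in range(n_spikes):
        amplitudes.append(amplitude)
        # A factor < 1 decrements the response, a factor > 1 facilitates it.
        amplitude *= relative_facilitation
    return amplitudes


for spike, amp in enumerate(response_amplitudes(0.9, 8), start=1):
    print(f"spike {spike}: {amp:.3f} of baseline")
```

Under this assumption the response first drops below half of its baseline size at the eighth spike in an uninterrupted train.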

Picrotoxin is a drug that blocks many inhibitory synapses, including those in this network. It is a non-competitive GABA-A receptor blocker, and thus works on the post-synaptic neuron. It does not affect the pre-synaptic neuron, which releases transmitter as usual.

- With the simulation running, check the picrotoxin box in the Experimental Control panel.

With the inhibition blocked, the individual neurons revert to tonic activity reflecting their underlying central excitatory drive.

- While the simulation continues to run, uncheck the picrotoxin box.

The neurons continue with the tonic activity – they do not return to oscillation.

Question: Why don’t the oscillations return when we take away the drug blocking inhibition? [Hint: remember which synaptic property allowed the oscillations in the first place and where the drug works.]

- While the tonic activity continues, click Stim in the Results view to manually inject a negative current pulse stimulus into N1.

This should restore the oscillations. Does this fit with your answer to the previous question (or perhaps help you to answer it if you were stuck)?

Take-home message: Oscillations can be generated by reciprocal inhibition between neurons, even when none of the individual neurons within the network has any tendency to oscillate in isolation. However, there has to be some mechanism, such as synaptic anti-facilitation, to prevent one neuron permanently inhibiting the other.
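The take-home message can be illustrated with a deliberately crude step-by-step sketch. It is not the Neurosim model: the names `simulate_half_centre`, `escape_level` and `recovery` are hypothetical, and the rules (the active synapse is scaled by the relative facilitation on every step, the resting synapse recovers linearly, and the loser escapes once the inhibition it receives falls below a fixed level) are assumptions chosen only to show the logic.

```python
def simulate_half_centre(relative_facilitation, steps=60,
                         escape_level=0.5, recovery=0.1):
    """Return which neuron (0 or 1) is winning at each time step."""
    strength = [1.0, 1.0]  # inhibition each neuron can currently deliver
    winner = 0             # neuron 0 arbitrarily wins the initial race
    history = []
    for _ in range(steps):
        history.append(winner)
        loser = 1 - winner
        # The active synapse decrements; the silent one recovers.
        strength[winner] *= relative_facilitation
        strength[loser] = min(1.0, strength[loser] + recovery)
        # The loser escapes once the inhibition onto it is weak enough.
        if strength[winner] < escape_level:
            winner = loser
    return history


decrementing = simulate_half_centre(0.9)
constant = simulate_half_centre(1.0)
print("".join(str(n) for n in decrementing[:28]))
print("".join(str(n) for n in constant[:28]))
```

With a factor of 0.9 the winner changes at regular intervals, whereas with a factor of 1.0 (no decrement) neuron 0 stays locked in as the winner for the whole run.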

Multi-Phase Rhythms

Generating a rhythm is only part of what is needed for locomotion – most movement involves the coordinated activity of several limbs and several muscles within each limb. We only know a little about how such coordinated activity arises, but much research has been done on potential mechanisms.

In legged locomotion there are actually four phases – leg up, leg forward, leg down and leg back. A simple elaboration on the half-centre model can generate this pattern.

- Load and Start the parameter file Multi-Phase Oscillator (v5-2). As previously, you will probably need to set a Slow down factor.

This shows a four phase progression which could drive a leg.

- Click Pause after you have observed a few cycles of the rhythm.

If you look at the network in the Setup View, you can see that there are actually 2 mutually inhibitory half-centres present, the diagonal pair N1 and N3 (labelled up-down) and the diagonal pair N2 and N4 (labelled forward-back). However, these are coupled in a ring of one-way inhibition, such that each element inhibits (and thus terminates the activity of) the neuron that was active in the preceding phase. This one-way inhibition is organized in a clockwise direction, and activity in the network thus progresses in an anti-clockwise direction (N4→ N3→ N2→ N1→ N4 etc.). (You can see this by watching the colours in the Setup view.)

In this 4-neuron network none of the synapses have to decrement in order to get oscillation. Looking at the activity in detail can explain why.

Pick a time when N1 has just started spiking.

- Place a vertical cursor on the first N1 spike in the cycle, so that you can clearly see what is happening at that time.

N2 was spiking, but it receives inhibition from N1, so it stops spiking and its membrane potential starts to hyperpolarize. N3 was already inhibited, and it too receives inhibition from N1 so it remains hyperpolarized. However, N4 is not being inhibited by N1 because there is no synaptic connection, and N2 and N3, which could inhibit it, are both themselves inhibited and so silent. So the N4 membrane potential starts to recover and depolarize (it was inhibited in the previous phase). Eventually, its membrane potential reaches threshold and it starts to spike. It then immediately inhibits N1, and the rhythm moves on to the next phase of the cycle.

And so it goes on …
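The sequence just described can be reproduced with a small logical sketch. The wiring table is inferred from the description above (each neuron inhibits its diagonal partner and the neuron that was active in the preceding phase) rather than read from the parameter file, and `recovery_steps` is a hypothetical stand-in for the time a released neuron takes to depolarize back to threshold. Note that none of the connections decrement.

```python
# Indices 0..3 stand for N1..N4; each entry lists the neurons it inhibits.
INHIBITS = {0: (1, 2), 1: (2, 3), 2: (3, 0), 3: (0, 1)}


def simulate_ring(steps=40, recovery_steps=4):
    """Return the order in which neurons start spiking, beginning with N1."""
    active = [True, False, False, False]
    recovery = [0, 0, 0, 0]
    order = [0]
    for _ in range(steps):
        # Inhibition is computed from the neurons active at the start of the step.
        inhibited = {t for src in range(4) if active[src] for t in INHIBITS[src]}
        for n in range(4):
            if n in inhibited:
                active[n] = False
                recovery[n] = 0
            elif not active[n]:
                # Free of inhibition: recover towards threshold, then spike.
                recovery[n] += 1
                if recovery[n] >= recovery_steps:
                    active[n] = True
                    order.append(n)
    return order


print(" -> ".join(f"N{n + 1}" for n in simulate_ring()))
```

The printed sequence cycles through N1, N4, N3 and N2 in turn, matching the anti-clockwise progression seen in the Setup view.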

- Click Continue to resume oscillations.

- While the simulation is running, check, and then uncheck the picrotoxin box in the Experimental Control panel.

The difference in response with removal of picrotoxin between this and the reciprocal inhibitory circuit described previously can be explained by the change in synaptic properties (decrementing vs non-decrementing) between the circuits.

Question: What would happen if one neuron in this circuit were damaged or destroyed? In a real experiment, this could potentially be achieved with dye-mediated laser photo-ablation (Miller & Selverston, 1979).

- With the model running, select the menu command Neuron: Zap. This pauses the simulation until you click on a neuron (your choice which). This “zaps” the neuron – it effectively destroys it and takes it out of the circuit just like laser photo-ablation. Observe what happens. Did it fit your prediction?

- Unlike in reality, we can resurrect a neuron with the Neuron: Un-Zap command. This means that you can un-kill your selected neuron, and kill another one. Trust me, this is a lot quicker and easier than doing real experiments!

[Optogenetics: In some experimental preparations you can achieve reversible inactivation of specific neurons if you can manipulate the cell to express a light-activated chloride channel. Switching a light on then massively inhibits that neuron (assuming it has a hyperpolarizing chloride reversal potential) and stops its activity, which is restored when the light is switched off.]

It is quite difficult to understand what is going on in this circuit, but if you think through the network carefully, you can figure out how this works. On the other hand, that is precisely the point of modelling – it would be a bold neuroscientist who would bet money (or their reputation) on exactly what the output of this circuit would be under different conditions, but by modelling we can find out!

Sensory Feedback

There is ample experimental evidence that central pattern generators really do exist – in many animals the nervous system can generate rhythms in the absence of sensory feedback. However, there is also ample evidence that sensory feedback normally plays an important role. Animals obviously have to adjust a locomotor rhythm in the event of unexpected external stimuli – if you trip up, you will take an extra rapid step to avoid falling on your nose. But also, most rhythms run faster in the intact animal with sensory feedback present than they do when the CNS is isolated from such feedback.

Sensory feedback may accelerate rhythms simply by providing additional general excitation to the nervous system. However, it can also act directly on the pattern generator itself.

- Load and Start the parameter file Sensory Feedback (v5-2).

In this circuit N1 and N2 at the top are a reciprocal-inhibitory half-centre oscillator like we saw earlier. They drive flexion and extension as labelled in the Setup view. Each half-centre activates a peripheral muscle (N3 and N6), and each muscle activates a peripheral sense organ (N4 and N5). These mediate negative feedback onto their half of the pattern generator. The delay of this feedback has been set to 200 ms, to account for the time taken for muscle contraction and axonal conduction.

The system generates 6 cycles of oscillation in the duration of the simulation.

- Apply the drug curare by checking the box in the Experimental Control panel.

With the current settings (Options: Run on change in the menu, and Auto clear in the Results view), this clears the screen and re-runs the experiment with the drug applied.

Curare (the famous South-American poison arrow drug) blocks nicotinic acetylcholine receptors and hence paralyses muscles and so disables the feedback. So in the presence of curare, the CPG oscillates at its intrinsic, free-running, frequency with no sensory feedback.

The oscillator now only generates slightly more than 4 cycles in the duration of the simulation. It is clearly running slower.

In this case, the cause of the frequency change is that the negative sensory feedback in the intact system is timed so as to truncate each burst of its half-centre driver, thus releasing the other half-centre early from its inhibition. This truncation allows the whole system to oscillate faster.
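The effect of burst truncation on frequency is simple arithmetic, sketched below. Only the 200 ms feedback delay comes from the text; the run duration and the free-running burst duration are hypothetical values chosen so that the toy roughly echoes the 6 versus slightly more than 4 cycles seen in the simulation. The sketch also assumes, as a simplification, that each burst ends exactly when the feedback arrives.

```python
def cycles_in_run(run_ms, free_burst_ms, feedback_delay_ms=None):
    """Number of oscillation cycles (possibly fractional), two bursts per cycle."""
    burst_ms = free_burst_ms
    if feedback_delay_ms is not None:
        # Feedback arriving before the burst would end by itself cuts it short.
        burst_ms = min(free_burst_ms, feedback_delay_ms)
    return run_ms / (2 * burst_ms)


RUN_MS = 2400        # hypothetical run duration
FREE_BURST_MS = 290  # hypothetical free-running burst duration

print(cycles_in_run(RUN_MS, FREE_BURST_MS, feedback_delay_ms=200))  # intact
print(cycles_in_run(RUN_MS, FREE_BURST_MS))                         # curare
```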

This is very much a “thought experiment” simulation. It illustrates one way in which sensory feedback could influence CPG frequency, but it is undoubtedly a massive simplification of the way real systems work.

Phase Resetting Tests

The frequency (or period) and phase are key characteristics of an oscillator, and if you do something experimentally that alters either of these, then you know that you have somehow affected the rhythm generation mechanism itself.

- Load and Start the parameter file Reset Test (v5-2).

Two neurons are visible and both oscillate. However, the program has been set up to hide any connections that might exist. This makes things a bit more realistic, since in a real experiment you cannot normally see connections between neurons (you are lucky if you can even see the neurons!).

So are both neurons endogenous bursters, or is it a network oscillator, or some sort of mixture?

Note that at the far right of the Results view, both neurons end the simulation run just at the completion of a spike burst.

- Set the amplitude of stimulus 1 to +0.5.

- Click Start without clearing the screen first.

The first part of the sweep is unchanged, but the stimulus induces a large burst of spikes in N1 and N2 (even though the stimulus was only applied to N1), and after that, things change. At times when the neuron was previously spiking it is silent, and when it was silent, it is spiking. In the new sweep the spikes “fill in the gaps” in the previous sweep.

- To see this more clearly, set the Highlight sweep to 1 in the Results view, and then change it to 2.

Note that while previously, with no stimulus, the sweep ended at the end of a spike burst in both neurons, it now ends just after the start of a spike burst in both neurons. This is because the stimulus forces the neurons to produce a burst of spikes earlier than they would have normally, and then after this early burst, the bursts continue at the same intervals that they had previously. The stimulus pulse has thus reset the phase of the rhythm, indicating that N1 is part of the rhythm-generating circuit. The question now is, is N2 also part of the rhythm-generating circuit?

- Click Clear.

- Switch the stimulus to apply it to N2.

- Either drag the stimulus box in the Setup view and drop it on N2,

- or set the Target Neuron in the Experimental Control panel to 2.

- Click Start.

The stimulus now induces an early burst of spikes in N2 but not in N1. Furthermore, this does not reset the phase of the rhythm. To confirm this:

- Do not click Clear.

- Set the stimulus amplitude to 0.

- Click Start.

With the two sweeps superimposed, you can see the early burst in N2 produced by the stimulus, but you can see that this has no effect on the rhythm – the bursts continue on afterwards just as though there was no stimulus at all.

This tells us that N2 is a “follower” neuron that does not actually participate in generating the rhythm. It is simply driven by a rhythm that is generated elsewhere – which in this case must be N1, since there is nothing else.
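The logic of the reset test can be written down as a comparison of burst onset times with and without the stimulus. The sketch below uses an idealized oscillator with made-up numbers (a 100 ms period and a stimulus at 430 ms); the function names and the `resets_rhythm` flag are hypothetical and simply encode the two possible outcomes described above.

```python
def burst_onsets(period, duration, stim_time=None, resets_rhythm=True):
    """Burst onset times (ms) of an idealized rhythm, optionally perturbed."""
    regular = list(range(0, duration, period))
    if stim_time is None:
        return regular
    if resets_rhythm:
        # The early burst restarts the cycle: later bursts keep the old
        # interval but are timed from the stimulus.
        before = [t for t in regular if t < stim_time]
        return before + list(range(stim_time, duration, period))
    # Follower: one extra burst, but the underlying rhythm is untouched.
    return sorted(set(regular) | {stim_time})


def phase_shift(control, test, period):
    """Shift of the final burst as a fraction of the cycle period."""
    return ((test[-1] - control[-1]) % period) / period


control = burst_onsets(100, 1000)
print(phase_shift(control, burst_onsets(100, 1000, 430, True), 100))
print(phase_shift(control, burst_onsets(100, 1000, 430, False), 100))
```

A non-zero shift that persists to the end of the sweep indicates that the stimulated neuron is part of the rhythm generator; a shift of zero is what a follower produces.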

To see the actual circuit:

- Click Connexions in the drop-down menu at the top of the main view.

- Note that there is a tick-mark beside the Hide connexions menu option.

- Click that option to de-select it and show connexions.

You can now see that N1 makes a non-spiking excitatory synapse onto N2. N1 is indeed an endogenous burster, and N2 is simply following the activity of N1 through its synaptic input. Anything you do to N2 has no effect on N1 because there is no synaptic connexion from N2 to N1.

Tadpole Swimming: A case study

Tadpoles may sound like rather obscure animals for a neuroscientist to study, but in fact the neural mechanism controlling swimming in hatchling tadpoles (charmingly known as polywiggles in Norfolk!) is one of the best understood vertebrate CPGs (Roberts et al., 2010). At a behavioural level, swimming can be initiated by a brief sensory stimulus (touch) to one side of the body, and is driven by wiggling the tail in left-right alternating cycles at 10-25 Hz, with a wave that propagates from head to tail. The core mechanism depends on interactions between just two types of interneurons: descending interneurons (dINs) and commissural interneurons (cINs). These occur as separate populations on the left and right side of the animal, but the dINs on each side are coupled to each other, so that they excite each other and essentially act as a single unit. The dINs excite trunk (myotomal) motorneurons on their own side of the spinal cord, so if the left dINs spike, the tail bends to the left, and if the right dINs spike, the tail bends to the right.

[Note: Polywiggle comes from old English poll = head (as in poll tax) and wiggle = wiggle!]

The dINs are glutamatergic neurons that arise in the hindbrain and rostral spinal cord. Because of their mutual excitation they develop a long-lasting NMDA-receptor mediated depolarization during swimming, and it is this depolarization that maintains swimming for episodes that can last from a few seconds to more than a minute. The dINs also drive the ipsilateral cINs through brief AMPA-receptor mediated EPSPs (ipsilateral means on the same side, as opposed to contralateral, which means on the opposite side). The cINs are glycinergic and feed inhibition back to the dINs, but on the other (contralateral) side of the spinal cord. It is thus the cINs that provide the reciprocal inhibition necessary to produce the alternating swimming rhythm. Unilateral lock-up is prevented by the cellular properties of the dINs, which each only spike once for a given depolarization. In order to spike again, there has to be an intervening hyperpolarization, which is provided by the cIN feedback. Thus during swimming the dIN spikes are triggered by rebound excitation from the IPSPs generated by the cINs.

The following simulations are based on parameters modified from Sautois et al. (2007). We will build up the circuit gradually, so that you can see the contribution of the components at each stage.

Basic dIN and cIN properties

- Load and Start the parameter file Tadpole dIN cIN.

There are two neurons, a single dIN and a single cIN, but there is no connection between them. Each receives stimuli (1 - 3), but these initially have 0 amplitude, so the traces are flat.

- With stimulus 1 selected, click the up arrow of the amplitude spin button in the Experimental Control panel and observe the effect of the long-duration stimulus in the dIN - initially it is a simple sub-threshold depolarization.

- Repeatedly click the up arrow until the amplitude reaches 0.1 nA.

The dIN starts to spike when the amplitude reaches 0.06 nA, but it just generates a single spike, even when the stimulus exceeds threshold. As you continue to increase the stimulus, the dIN produces some post-spike oscillations, but it does not generate more than just the single initial spike. This is a rather unusual property for a neuron, but it is characteristic of dINs in the tadpole.

- Select stimulus 2 (by clicking it in the list or clicking its box in the Setup view), and click the up arrow of the amplitude spin button to inject depolarizing current into the cIN.

- Repeatedly click the up arrow until the amplitude also reaches 0.1 nA.

The cIN has a slightly lower threshold than the dIN, and once threshold is exceeded it generates multiple spikes, which increase in frequency as the stimulus strength increases. This is a more normal neural response to stimulation. The cause of the difference lies in the kinetics of the voltage-dependent ion channels in the two neurons.

[Note: If you wish you can double-click a neuron to open its Properties dialog and then examine the voltage-dependent channels. But unless you're really interested you don't need to get into that level of detail if you just accept the properties at a functional level.]

- Select stimulus 3. Note that this stimulus is applied to both neurons.

- Click the down arrow of the amplitude spin button, to inject a brief pulse of negative current (-0.01 nA) into both the dIN and the cIN.

The dIN, which is depolarized but not spiking at the time of the negative pulse, responds by generating a small spike at the termination of the pulse. This is a case of rebound excitation as demonstrated earlier in the classic HH model, and has the same underlying cause: relief of inactivation of voltage-dependent sodium channels, and closure of open voltage-dependent potassium channels.

- Click the down arrow of the stimulus 3 amplitude spin button a few more times until the amplitude reaches -0.05 nA.

Note that increasing the hyperpolarization increases the size of the rebound dIN spike, and blocks spikes in the cIN.

Next we start to connect the neurons:

- Load and Start the parameter file Tadpole dIN cIN PIR.

As before, the upper neuron in the Setup view is a dIN, and it now makes an NMDA-type excitatory connection to itself (the blue diamond labelled c). This synapse represents the re-excitation of the whole population of dINs caused by the reciprocal synapses that they form with each other. The lower neuron is a cIN, and if it spikes, it will inhibit the dIN through its glycinergic synapse (the blue diamond labelled b).

Initially, the cIN is silent, but the dIN spikes in response to stimulus 1. The recurrent dIN excitation is visible as a long-lasting depolarization following the spike.

- Apply the drug AP5 (amino-5-phosphonopentanoic acid) by checking its box in the Experimental control panel. This is a selective antagonist of the NMDA receptor, and it abolishes the depolarization following the dIN spike.

- Remove the AP5, and click the up arrow of the amplitude spin button of stimulus 2. This stimulus makes the cIN spike, which in turn produces an IPSP in the middle of the self-induced depolarization of the dIN. The dIN generates a rebound spike as its membrane potential recovers following the IPSP. [In passing, note that the cIN spike is briefer than the dIN spike. The dIN spikes are in fact unusually broad for vertebrate spiking neurons.]

- Apply the drug strychnine, which blocks glycine receptors. The IPSP disappears, as does the rebound dIN spike.

- Finally, remove the strychnine and re-apply AP5. The dIN only produces a rebound spike if the IPSP occurs during the activity-induced depolarization - the dIN does not spike on rebound from an IPSP occurring at resting potential.

The core swimming circuit

Now that we have seen the key properties of the individual dINs and cINs and how they interact with each other, we can build a minimalist CPG circuit.

- Load and Start the parameter file Tadpole Core CPG.

First look at the circuit in the Setup view. There are 4 neurons in total, comprised of a left-right pair of dINs (N1, N3 in the top row) and a left-right pair of cINs (N2, N4 in the bottom row). Each dIN excites itself through a long-lasting NMDA-type synapse (c), and its ipsilateral cIN through a brief (phasic) AMPA-type synapse (a). Each cIN inhibits its contralateral dIN through a phasic glycinergic synapse (b). Of course, each neuron in the model represents many neurons in the real animal, and the whole circuit is replicated many times along the length of the spine. So this is very much a simplified "concept model" of the real situation.

Now look at the Results view. Swimming is initiated by separate stimuli (bottom trace) applied to the left and right dINs, but once initiated it is self-sustaining. We will come back to the initiation later, but for now concentrate on swimming itself.

Swimming is characterised by alternating spikes in the left and right dINs (top and third traces, brown and magenta - each magenta spike occurs between two brown spikes). Since each dIN excites motorneurons on its side of the spine, this will generate side-to-side movement of the tail, resulting in swimming in the real animal. Note that the dIN spikes occur on top of a sustained depolarization caused by the recurrent excitation, which is interrupted periodically by IPSPs generated by the cINs (second and fourth traces, green and blue). In contrast, the cIN spikes result from brief EPSPs arising from the resting potential - there is no sustained depolarization.

[Note: One easy way to check the alternation is to drag the magenta trace up so that it overlays the brown trace. You can then see the relative timing. You can restore the position by resetting the top and bottom axes scales for the 3rd axis back to +40 and -70.]

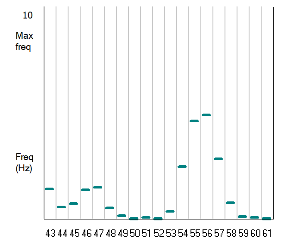

- Select the Analyse: Timing menu command to display the Frequency etc vs Time dialog.

- Adjust the top Y axis to 50 Hz, and note that the dIN spike frequency stabilizes at about 25 Hz, which is within the range of normal swimming behaviour.

- Close the dialog.

Now examine the timing of the activity in the 4 neurons, ignoring the start of the simulation (because initiation is a tricky issue that we will return to).

- Click the expand timebase button in the Results toolbar three times, and then click the Go to end button. You should now have a "zoomed in" view of the last part of the simulation.

- Click the vertical cursor button in the Results toolbar, to give yourself a datum to see relative timing.

- Drag the cursor to line up with the peak of the first visible dIN spike in the top trace.

The left dIN (top trace, brown) activates the left cIN (second trace, green) with short latency, and this in turn generates a short-latency IPSP in the right dIN (third trace, magenta). It takes time for the right dIN to recover from this IPSP, but when it does, it generates a rebound spike. This then generates a spike in the right cIN (fourth trace, blue), which feeds back to inhibit the original left dIN (back to the top trace). And so the sequence continues. The key element determining the cycle period in this circuit is the recovery time from the IPSP. This depends in part on the properties of the IPSP itself, but also on the voltage-dependent characteristics of the long-term EPSP in the dIN.

- Remove the vertical cursor by right-clicking anywhere in the Results view, and selecting Del all vert cursors from the context menu.

Spike-Triggered Display

A useful technique that can emphasise the timing and consistency of synaptic interactions is to use a spike-triggered display (see e.g. Fig 3c in Roberts et al., 2010).

In the Results view:

- Clear the screen.

- Select Spike triggered from the Trigger mode frame.

- Set the right-hand timebase axis to 50 ms.

- Uncheck the Auto clear box.

- Click Start.

Multiple sweeps are superimposed, each showing one cycle of swimming. The important point is that the sweeps are all aligned so that the membrane potential of the left dIN (specified as the trigger neuron, 1) crosses 0 (pre-defined as the synaptic trigger level in the neuron properties dialog) at exactly 5 ms (the specified pre-trigger delay) after the start of the sweep display.

Because the left dIN spikes (top trace, brown) are all aligned and the dINs drive the ipsilateral cINs with a fixed synaptic delay, the left cIN spikes (second trace, green) are also aligned. The left cIN spikes cause IPSPs in the right dINs (third trace, magenta), which are aligned, and these generate rebound spikes in the right dINs, which activate the right cINs (fourth trace, blue). And this leads to the next cycle.

The variability in the display is because the swim pattern takes a few cycles to "settle down" after initiation. You can see this by looking selectively at individual sweeps:

- Set the Highlight sweep to 1 and mentally note the display. Pay attention to the orange trace (the right dIN) - this is a good monitor of activity.

- Advance the Highlight sweep value by clicking the up arrow on the spin button until you reach sweep 10 (there are 25 in total).

You should see that the first few sweeps vary, but after about sweep 6 the pattern stabilizes, and subsequent sweeps are identical.

To get an overview of the "typical" pattern you can look at the average of the sweeps:

- Select the Analyse: Average menu command.

The Results view now shows each trace as the point-by-point average of all 25 sweeps. The raw sweeps are shown in grey, while the average is shown as a highlighted trace. The average spike peaks are smaller because of the variation in spike timing in the early sweeps, but the overall pattern of activity is very clear.
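For readers who want to see what the spike-triggered display and its average amount to, the sketch below aligns sweeps on upward crossings of the trigger level and then averages them point by point. It is an illustration in plain Python, not Neurosim's own code: the function names are hypothetical, the traces are synthetic, and one sample is assumed to represent 1 ms so that the defaults mirror the 5 ms pre-trigger delay and 50 ms sweep used above.

```python
def spike_triggered_sweeps(trigger, trace, level=0.0, pre=5, length=50):
    """Cut sweeps from `trace`, aligned on upward crossings in `trigger`."""
    sweeps = []
    for i in range(1, len(trigger)):
        crossed_up = trigger[i - 1] < level <= trigger[i]
        start = i - pre
        # Keep only sweeps that fit entirely inside the recording.
        if crossed_up and start >= 0 and start + length <= len(trace):
            sweeps.append(trace[start:start + length])
    return sweeps


def average_sweeps(sweeps):
    """Point-by-point average of equal-length sweeps."""
    return [sum(samples) / len(sweeps) for samples in zip(*sweeps)]


def make_trace(spike_samples, n=200, rest=-60.0, peak=40.0):
    """Synthetic trace: flat at rest with one-sample spikes."""
    trace = [rest] * n
    for s in spike_samples:
        trace[s] = peak
    return trace


left_dIN = make_trace([10, 70, 130])   # trigger neuron
right_dIN = make_trace([30, 90, 150])  # spikes 20 samples after each trigger
sweeps = spike_triggered_sweeps(left_dIN, right_dIN)
mean = average_sweeps(sweeps)
print(len(sweeps), mean.index(max(mean)))
```

Because every sweep starts a fixed interval before a trigger crossing, any event with a consistent latency relative to the trigger lands at the same position in each sweep and survives averaging, while events with variable timing are smeared out.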

Swim initiation

In the simulation above, swimming is initiated by separate stimuli to the left and right dINs, but these stimuli have a 40 ms time delay between them (stimuli 1 and 2 occur at 25 and 65 ms respectively). This is a bit unrealistic. It is hard to imagine how a single sensory stimulus could drive the two sides with such a long delay between them - tadpoles are very small, and axonal conduction across the body could not take more than a few milliseconds.

What happens if the two sides are driven simultaneously?

- Reload the parameter file Tadpole Core CPG to get back to the starting conditions.

- Change the delay for stimulus 2 from 65 to 25 ms (the same as stimulus 1).

- Click Start.

The circuit still produces activity, but the two sides spike synchronously (left and right dINs spike together rather than in alternation), and the frequency is more than doubled. This would not produce an effective swimming behaviour. The cause of the synchronicity is that when the two sides are activated at exactly the same time, the crossed inhibition occurs immediately after each dIN spike, rather than with a half-cycle delay. However, this is a metastable condition: like a knife balanced on its edge, it will tend to fall one way or the other with any random perturbation. If noise is added to the system, then sooner or later it collapses into the more stable pattern of left-right alternation.

- Load and Start the parameter file Tadpole Core CPG w Noise (you may want to set the Slow down factor > 0 to make the activity more easily visible).

The circuit is exactly the same as before, but random noise has been added to each neuron. The pattern (probably) starts in the high-frequency synchronous mode, but sooner or later it (probably) collapses into the alternating mode. You may need to run the simulation several times, since with random noise it is not possible to predict in advance when the switch will occur.

Surprisingly, synchronous activity does occasionally occur in the real animal (Li et al., 2014), but it has no known function and is probably a "mistake". It normally only lasts briefly, before relapsing into normal swimming. It probably arises because the synchronous activity occupies a metastable state similar to that seen in the simulation.

In the real animal it would not be satisfactory to rely on noise to perturb a metastable equilibrium, since the timing is unpredictable. To solve the swim initiation conundrum, we have to bring in another class of interneurons, the ascending interneurons (aINs). The aINs are rhythmically active during swimming, but they inhibit ipsilateral neurons in the CPG - in particular, they inhibit the cINs.

- Load and Start the parameter file Tadpole Initiate (v5-2).

The Results view shows good swimming activity, but the Setup view shows that there is only one stimulus applied to the circuit to initiate swimming, so there can be no bilateral difference in stimulus timing.

The Setup view also shows that there are 3 new neurons in the circuit. N5 and N6 are aINs. They receive AMPA-receptor mediated EPSPs from their ipsilateral dIN (which is what makes them rhythmically active), and they make glycinergic inhibitory output to their ipsilateral cIN. N7 (at the top of the Setup view) is a left-hand sensory neuron. (This neuron uses simplified integrate-and-fire spikes rather than the full HH formalism, since its only job is to generate PSPs in the neurons post-synaptic to it.) It makes bilateral excitatory input to both the dINs and the aINs. This is definitely an oversimplification of the real circuit (for instance, there are other interneurons interposed between the sensory neurons and the CPG neurons), but it conveys the essential features.

- Click the expand timebase button in the Results toolbar three times to "zoom in" on the initiation stage of swimming.

- Activate a vertical cursor (using the cursor button in the Results toolbar), to give yourself a datum to see relative timing.

- Align the cursor on the sensory neuron spike (bottom trace).

The key point with respect to swim initiation is that there is a delay in the sensory activation of the contralateral aIN. All synaptic delays in the simulation have been set to 1 ms, except the N7-to-N6 connection, which has a delay of 3 ms. The following sequence takes you through the consequences of this delay:

- On the left-hand side (ipsilateral to the stimulus), the aIN (3rd trace, orange) spikes immediately following the stimulus. This left aIN spike is early enough to inhibit the cIN on that side (2nd trace, green), and prevent it from spiking.

- This means that the right dIN (4th trace, magenta) does not receive an IPSP immediately after its spike (as it did during synchronous activity above), and does not generate an immediate rebound spike.

- On the contralateral side, the extra 2 ms in the activation time of the right aIN (6th trace, dark green) means that the right cIN (5th trace, blue) can "escape" and is not inhibited. Note that the left and right cINs are activated at exactly the same time, but the left cIN receives the aIN IPSP before it spikes (which prevents it from spiking), while the right cIN does not receive the aIN IPSP until after it spikes.

- This means that the left dIN (1st trace, brown) does receive an IPSP immediately after its first spike, and its second spike therefore occurs early. However, after this one early double-frequency spike, the rest of the swim episode shows the normal alternating pattern.

This asymmetry in dIN activation essentially replicates the 40 ms relative delay in dIN activation used in the core circuit simulation to initiate swimming, but it does it using only a 2 ms difference in activation time between aINs on the left and right sides of the animal, which is within a plausible biological range.
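The timing race described in the sequence above can be written out as simple arithmetic. The sketch below is only an illustration: the synaptic delays (1 ms, and 3 ms for N7-to-N6) come from the text, but the EPSP-to-spike latencies are hypothetical values, and the rule that a cIN is blocked whenever the aIN IPSP arrives before the cIN would have spiked is a deliberate simplification.

```python
SYN_DELAY_MS = 1.0  # delay of every synapse except N7-to-N6 (from the text)

def cin_outcome(contra_ain_delay_ms, ain_latency_ms=1.0,
                din_latency_ms=1.0, cin_latency_ms=1.0):
    """Which cINs spike after a sensory spike at t = 0?

    The three latencies (EPSP onset to spike) are hypothetical.
    """
    # Both cINs are driven at the same time: sensory -> dIN -> cIN.
    cin_spike = SYN_DELAY_MS + din_latency_ms + SYN_DELAY_MS + cin_latency_ms
    # Arrival time of the aIN IPSP at the cIN on each side.
    ipsp_left = SYN_DELAY_MS + ain_latency_ms + SYN_DELAY_MS
    ipsp_right = contra_ain_delay_ms + ain_latency_ms + SYN_DELAY_MS
    # Simplifying assumption: an IPSP arriving before the spike blocks it.
    return {"left": ipsp_left >= cin_spike, "right": ipsp_right >= cin_spike}

with_extra_delay = cin_outcome(contra_ain_delay_ms=3.0)
without_extra_delay = cin_outcome(contra_ain_delay_ms=1.0)
print(with_extra_delay, without_extra_delay)
```

With these assumed latencies, the 3 ms contralateral delay lets only the right cIN spike, whereas equal delays on the two sides block both cINs and so remove the asymmetry.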

Take-home message: The swimming rhythm in hatchling tadpoles is primarily driven by spikes in descending interneurons (dINs). These spikes activate commissural interneurons (cINs), which in turn inhibit contralateral dINs. The dIN spikes themselves result from post-inhibitory rebound from this contralateral inhibition, superimposed on an NMDA-receptor mediated background depolarization. The background depolarization is caused by mutual re-excitation between dINs.

Short-Term Motor Memory and the Sodium Pump

Tadpoles swim in episodes that can last from a few seconds to several minutes (although in the simulations above, they last indefinitely). In a real tadpole, an episode can be terminated abruptly by a mechanical stimulus to the cement gland on the head, such as a “nose bump” collision with an obstacle (or even the under-meniscus of the water surface). The sensory input induced by the stimulus activates GABAergic neurons in the hindbrain, which in turn inhibit the spinal circuitry involved in generating the swimming rhythm. However, even without such acute sensory inhibition, natural swimming frequency within an episode gradually slows, and eventually the episode self-terminates. If a second swim episode is induced by appropriate excitatory stimulation within a minute or so after the natural termination of the previous episode, the duration of the second episode can be substantially reduced. The shorter the gap between the episodes, the greater is the shortening effect. It thus appears that the spinal circuitry "remembers" its previous activity for a brief period, and this memory affects its subsequent output. This phenomenon has been termed short-term motor memory (STMM: Zhang & Sillar, 2012).

The mechanism underlying STMM in tadpoles is, at least in principle, surprisingly simple. At the termination of an episode of swimming, the membrane potential of most spinal neurons, including cINs, shows a small (5-10 mV) but significant period of extended hyperpolarization. This can last for up to a minute (similar to STMM), with the potential gradually returning to its pre-swim resting level during this period. Thus, if the second swim episode is elicited during this ultra-slow after-hyperpolarization (usAHP), the duration of the second episode is reduced. If the gap between episodes is short, the usAHP amplitude induced by the first is still relatively large and the STMM effect is pronounced. If the gap is longer, the usAHP amplitude has decreased and the STMM effect is reduced. If the gap is sufficiently long that there has been full recovery from the usAHP, then there is no STMM, and the second episode duration is normal.

So the next question is, what causes the usAHP? This too has a quite simple explanation. During the swim episode the cINs spike repeatedly at up to 25 Hz, and during this period, there is a substantial inflow of sodium ions into the neuron, carried in part through the voltage-dependent sodium channels of the spike itself, and in part through the AMPA-receptor mediated EPSPs that drive the cIN spikes. The sudden influx of sodium overwhelms the constitutive sodium clearance mechanism (the standard Na/K ATPase: the “sodium pump”), so the intracellular sodium concentration starts to rise. This activates a specific alpha-3 sub-type of sodium pump which has a lower affinity for sodium and is normally silent, but is activated by high concentrations of intracellular sodium. This “dynamic” sodium pump, like the standard pump, is negatively electrogenic (it pumps 3 Na+ ions out for every 2 K+ ions it pumps in, leading to a net hyperpolarizing current), and it is this which causes the usAHP. As the excess sodium is cleared from the neuron, the dynamic pump activity declines, and thus so does the usAHP.
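The chain of events in this explanation (sodium accumulates during spiking, the dynamic pump current follows the excess sodium, and the membrane hyperpolarizes in proportion) can be sketched as a one-variable model. This is not the Neurosim implementation. All parameter values (influx rate, a 20 s clearance time constant, 1 mV of hyperpolarization per mM of excess sodium) are hypothetical and were chosen only to give a usAHP of roughly 5 mV that recovers over about a minute.

```python
def simulate_usahp(episode_s=20.0, total_s=100.0, dt=0.01,
                   influx_mm_per_s=0.4, pump_tau_s=20.0,
                   mv_per_mm=1.0, v_rest_mv=-60.0):
    """Euler integration of excess intracellular sodium and the resulting usAHP.

    Returns a list of (time_s, excess_na_mm, membrane_potential_mv).
    All parameters are heuristic assumptions, not measured values.
    """
    na_excess = 0.0
    trace = []
    for i in range(int(round(total_s / dt))):
        t = i * dt
        # Sodium enters only while the neuron is spiking (the swim episode).
        influx = influx_mm_per_s if t < episode_s else 0.0
        # The dynamic pump clears sodium at a rate proportional to the excess.
        clearance = na_excess / pump_tau_s
        na_excess += dt * (influx - clearance)
        # Pump current, and hence hyperpolarization, scales with the excess.
        v = v_rest_mv - mv_per_mm * na_excess
        trace.append((t + dt, na_excess, v))
    return trace

trace = simulate_usahp()
t_peak, na_peak, v_peak = min(trace, key=lambda row: row[2])
print(t_peak, na_peak, v_peak, trace[-1][2])
```

The most negative potential occurs at the end of the episode, and the potential then relaxes back towards rest as the excess sodium is cleared.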

- Load and Start the parameter file Tadpole STMM.

A pair of stimuli is applied to the dINs to elicit an episode of swimming. (Note the very slow timescale, so individual cycles within the episode merge on the screen to appear as a solid block.) Unlike in previous simulations, the episode self-terminates due to the development of the usAHP. [Note that there are other mechanisms that contribute to self-termination of swim episodes (e.g. Dale, 2002), but they are not implemented in this simulation.]

The top trace (green) shows activity in the left cIN during the episode. The vertical scale has been expanded to "zoom in" on the base membrane potential, to make the time course of the usAHP clearly visible. Before the episode the resting membrane potential is -60 mV, immediately after the episode it has hyperpolarized to about -65 mV (peak usAHP), and then it slowly recovers back to -60 mV over about a minute. The second trace (blue) shows the right cIN at a normal scale, so that the spikes are fully visible. The usAHP is identical in this neuron, but less obvious at this scale.

The episode terminates because the usAHP eventually causes the dIN-mediated EPSP in the (left) cIN to drop below threshold. This breaks the feedback loop, and terminates the episode.

The bottom trace (purple) shows the intracellular sodium concentration in the left cIN. It rises during the swimming episode, and then falls again after the episode, as the dynamic sodium pump restores the concentration to its resting level. The 4th trace (black) shows the hyperpolarizing current generated by the dynamic pump, which mirrors the change in sodium concentration, and which is directly responsible for the usAHP. (The pump current sign convention follows that of a normal ionic channel, so an upward deflection indicates an outward, hyperpolarizing, positive current.)

Implementation details: The circuit is identical to that shown previously, but the voltage-dependent sodium channels and the AMPA receptors in the cINs have been set to have sodium as their carrier ion (AMPA receptors are mixed cation channels, so the sodium component is set at 50% of the total current). This is achieved through the relevant Properties dialogs. In addition, the cINs have a sodium concentration-dependent electrogenic sodium pump, which generates the usAHP. The dINs are unchanged from the previous simulations. (They do in fact have a putative usAHP, but this is masked by a hyperpolarization-activated current Ih (Picton et al., 2018) and so is ignored in this simulation.) Note that quantitative parameters determining sodium concentration and pump rate are heuristic - there is no physiological evidence for the values in this system.

- With stimulus 1 selected, click the Gauss selection for the stimulus. Repeat for stimulus 2.

- Click Start (no need to clear the results, because Auto clear is pre-selected).

The Gauss stimulus option has been set up to deliver two episode-initiating stimuli to the dINs, with the second occurring about 7 s after the termination of the first episode. The second pair of stimuli successfully initiates another swim episode, but the duration of this second episode is much shorter than that of the first. This is short-term motor memory!

- Select the Analyse: Timing menu command to open the Frequency etc. vs Time dialog.

- Set the top scale of the Y axis to 50 Hz.

The decline in cycle frequency within each episode, and the shorter duration of the second episode, are clearly visible.

- Close the dialog.

The cause of the reduced duration of the second episode is fairly obvious from the sodium pump current trace (black, fourth trace). At the time of the start of the second episode the pump current has only slightly declined from its peak level, and so as more sodium floods into the cell (purple, bottom trace) the pump current soon returns to the level that was sufficient to render the cIN EPSP sub-threshold, and thus to terminate the second episode.

- Change the Mean interval value for both stimuli from 40 to 60 s. This will delay the second swim-initiating stimulus.

- Click Start.

The gap between the end of the first episode and the start of the second is now longer and the dynamic pump has had more time to clear sodium from the cell, and so its current level is reduced. It therefore takes longer to return to the peak level that blocks the cIN EPSP, and the duration of the second episode is extended, although still considerably shorter than that of the first.
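The dependence of the second episode duration on the gap can be made explicit with a small calculation. It rests on strong simplifying assumptions that are not part of the Neurosim model: excess sodium rises towards a ceiling during swimming and decays exponentially at rest, and an episode is taken to end as soon as the excess reaches a fixed level (standing in for the pump current that renders the cIN EPSP sub-threshold). All numerical values are hypothetical.

```python
import math

INFLUX = 0.4        # mM/s of sodium entry during swimming (hypothetical)
TAU = 20.0          # s, clearance time constant of the dynamic pump (hypothetical)
STOP_LEVEL = 5.0    # mM excess sodium at which the episode ends (hypothetical)

def episode_duration(na_start):
    """Time for excess sodium to climb from na_start to STOP_LEVEL.

    During swimming: d(na)/dt = INFLUX - na / TAU, which has the solution
    na(t) = ceiling + (na_start - ceiling) * exp(-t / TAU).
    """
    ceiling = INFLUX * TAU
    return TAU * math.log((ceiling - na_start) / (ceiling - STOP_LEVEL))

def second_episode_duration(gap_s):
    """Duration of an episode started gap_s after the previous one ended."""
    na_left_over = STOP_LEVEL * math.exp(-gap_s / TAU)
    return episode_duration(na_left_over)

first = episode_duration(0.0)
short_gap = second_episode_duration(7.0)
longer_gap = second_episode_duration(27.0)
print(first, short_gap, longer_gap)
```

In this sketch the second episode lengthens as the gap increases and approaches the duration of the first episode once the excess sodium has been fully cleared.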

Take-home message: A dynamic sodium pump in cIN neurons generates an ultra-slow after-hyperpolarization (usAHP) in response to the increase in intracellular sodium concentration that occurs during a swim episode. If a second episode is initiated before the usAHP has decremented to rest level, the duration of the second episode is reduced in a gap duration-dependent manner. This mediates a short-term motor memory (STMM).

Finally, it should be noted that there is increasing evidence that sodium pump-induced usAHPs are widespread in the nervous system of many animals. In some cases they may mediate STMM as in the tadpole, in others they may have different functions, such as protecting from excitotoxicity-inducing hyperactivity in hippocampal neurons (see Picton et al., 2017, for references).

Synchronization and Entrainment

People who study oscillators (particularly physicists) distinguish between synchronization and entrainment. Synchronization occurs when independently-rhythmic entities interact with each other bi-directionally to produce a coordinated system response. Entrainment is when the interaction is one-way. Thus our circadian clock is entrained by the external day-night light cycle (our internal biological clock does not affect the rotation of the earth!), but the multiple neural oscillators in the suprachiasmatic nucleus that maintain our clock synchronize each other by mutual interaction.

Entrainment

- Load and Start the parameter file Entrainment.

The single neuron shows an endogenous rhythm. It does not have full H-H spikes, but that doesn’t matter for our purpose; what matters is that it oscillates at a fixed frequency which is determined by its intrinsic properties.

- Without clearing the screen, set the Amplitude of Pulse 1 to 0.04 (a single click on the up arrow of the spin button should do it).

- Click Start.

A repetitive stimulus now occurs with a frequency which is slightly faster than the endogenous rhythm. The stimulus continuously “pulls” the oscillation forward, so that on each cycle the spike occurs slightly earlier than it would at the endogenous frequency. This is an example of entrainment, and the stimulus could be called a forcing stimulus.

- Use the Highlight sweep facility in the Results view to look at each sweep in turn to make sure you understand what is happening.

- Select the Analyse: Timing menu command to open the Frequency etc vs Time dialog, and move the dialog so that it does not obscure the Results.

Note that a red horizontal cursor has appeared in the Results view. This acts as a threshold for spike detection.

With the default settings, the dialog displays the instantaneous frequency of spikes in the Results view. A "spike" is regarded as occurring during a time period in which the membrane potential goes above the red cursor. (It doesn't have to be an actual spike. Any oscillation that goes above threshold and then drops below threshold is regarded as a spike.) Instantaneous frequency is the reciprocal of the time interval between the onset of spikes, so there is one fewer frequency value than there are spikes in the display. Various other analysis options are available within the dialog, but they are not used in this activity.
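The definition of instantaneous frequency given above can be sketched in a few lines. This is an illustration of the calculation only, not the code Neurosim uses; the function names, the sine-wave test signal and the threshold of 0.5 are hypothetical, while the 1.6 Hz frequency matches the endogenous rhythm in this activity.

```python
import math

def spike_onsets(times, volts, threshold):
    """Times at which the signal crosses the threshold in the upward direction."""
    onsets = []
    for i in range(1, len(volts)):
        if volts[i - 1] <= threshold < volts[i]:
            onsets.append(times[i])
    return onsets

def instantaneous_frequency(onsets):
    """Reciprocal of each interval between successive onsets."""
    return [1.0 / (later - earlier) for earlier, later in zip(onsets, onsets[1:])]

# Test signal: 5 s of a 1.6 Hz oscillation sampled every 1 ms.
dt = 0.001
times = [i * dt for i in range(5000)]
volts = [math.sin(2 * math.pi * 1.6 * t) for t in times]

onsets = spike_onsets(times, volts, threshold=0.5)
freqs = instantaneous_frequency(onsets)
print(len(onsets), len(freqs), freqs[0])
```

Because each frequency value needs a pair of onsets, the list of frequencies is always one shorter than the list of onsets.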

- The default threshold value is suitable for this analysis, but if it were not you could adjust its position by changing the Threshold value within the dialog, or simply by dragging the red cursor to a new location in the Results view.

The row of dots across the dialog screen shows the instantaneous frequency of the oscillation.

- Check the Autoscale Y box near the bottom of the dialog to get a more accurate view of the frequency.

- Hover the mouse over a dot, and read the frequency as the Y value displayed to the left of the graph near the bottom of the dialog. It should be about 1.6 Hz. (You could also just look at the Y axis scales.)

This is the frequency of the oscillations in sweep 1 (the sweep ID is shown at the top-left of the dialog), which is the endogenous rhythm without the forcing stimulus.

- Check the Concat[enate] sweeps box near the top of the dialog.

Both sweeps are now displayed in sequence in the frequency graph. Note that in the second sweep, on the right side of the dialog graph, the frequency increases to 1.8 Hz. This is the result of the forcing stimulus entraining the rhythm to a higher frequency.

How far can a forcing stimulus push a natural rhythm to change its endogenous frequency? The answer depends entirely on the properties of the stimulus and of the mechanism generating the natural rhythm. But we can explore this a bit in the current simulation.

- Click Clear in the main Results view. (The frequency graph now indicates that there are no data for analysis.)

- Change the sweep duration to 20 s by editing the right-hand x axis scale. This will give us more time in which to establish a rhythm.

- Successively set the stimulus amplitude to 0 (zero), 0.04 and then 0.03, clicking Start after each change. Do not clear the screen between runs.

The first two sweeps exactly repeat the previous experiment, but the third sweep attempts entrainment with a weaker forcing stimulus. With the Concatenate sweeps box still checked, the frequency graph now shows all three sweeps in sequence.

The weaker stimulus attempts to increase the rhythm frequency, but periodically fails. This can be seen more clearly by looking at the individual sweeps.

- Uncheck the Concatenate sweeps box in the frequency dialog.

- View Sweeps 1, 2 and 3 successively.

To see what is actually happening we need to zoom on the relevant section in the Results view.

- Set the start time (left-hand timebase axis) to 5 s and the end time (right-hand timebase axis) to 9 s.

- Switch the Highlight sweep between 2 (with the 0.04 nA stimulus) and 3 (with the 0.03 nA stimulus).

You can see that with the stronger stimulus (sweep 2) every cycle of the rhythm is entrained: there are 7 stimulus pulses visible in the display and 7 spikes, so each stimulus is followed by a spike. However, with the slightly weaker stimulus (sweep 3) there are still 7 stimulus pulses, but only 6 spikes. The rhythm "skips a beat" on the 4th visible stimulus.

Take-home message: A periodic forcing stimulus can entrain an endogenous neural oscillator to a different frequency, but only within a certain range.
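The limited range of entrainment can be reproduced with a very reduced model. The sketch below replaces the Neurosim neuron with an abstract phase oscillator: the phase climbs from 0 to 1 at the endogenous rate (1.6 Hz, as in the text), a spike occurs when it reaches 1, and each forcing pulse (1.8 Hz, as in the text) advances the phase by a fixed "kick". The kick sizes are hypothetical and are not equivalent to the stimulus amplitudes used in the simulation.

```python
def simulate(kick, f0_hz=1.6, stim_hz=1.8, duration_s=20.0):
    """Event-driven phase oscillator driven by periodic phase kicks.

    Returns (spike_times, stimulus_times).
    """
    t, phase = 0.0, 0.0
    spikes = []
    stim_times = [k / stim_hz for k in range(1, int(duration_s * stim_hz) + 1)]
    for t_stim in stim_times:
        # Free-run up to the stimulus, spiking whenever the phase reaches 1.
        while phase + (t_stim - t) * f0_hz >= 1.0:
            t += (1.0 - phase) / f0_hz
            spikes.append(t)
            phase = 0.0
        phase += (t_stim - t) * f0_hz
        t = t_stim
        # The forcing pulse advances the phase; a large enough kick fires a spike.
        phase += kick
        if phase >= 1.0:
            spikes.append(t)
            phase = 0.0
    return spikes, stim_times

strong_spikes, strong_stims = simulate(kick=0.12)
weak_spikes, weak_stims = simulate(kick=0.08)
print(len(strong_spikes), len(strong_stims), len(weak_spikes), len(weak_stims))
```

In this abstraction one-to-one entrainment requires the kick to make up the phase shortfall at each stimulus, which is 1 - 1.6/1.8, or about 0.11. The stronger kick therefore produces one spike per stimulus, while the weaker kick speeds the rhythm up but cannot keep pace, so some stimuli are not followed by a spike.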

Synchronization

Most real oscillator circuits involve a pool of neurons with quite similar properties, rather than just single neurons. This makes the circuit more robust, since individual neurons in the pool can be damaged or destroyed without seriously impairing the circuit as a whole. However, there has to be some way of synchronizing the neurons, and this is typically accomplished by electrical coupling between local neighbours. This enables a neuron which is oscillating too rapidly to be “pulled back” by current drain into its more restrained neighbours, while one which is going too slowly will be helped along. This process is called synchronization.

WARNING: The following simulation involves flashing multi-coloured lights. If you could be adversely affected by this sort of visual stimulus, you should use Neuron: Colours: Edit colour map to change the map to monochrome, or remove the colours entirely by unchecking Colour from voltage.

- Load and Start the parameter file Synchronization.

There is a 10 x 10 matrix of neurons, each of which is a non-spiking endogenous burster. The bursters have identical properties and they start off synchronized, but each has a substantial amount of membrane noise which will randomly perturb its rhythm. In the Setup view the neurons are colour-coded by their membrane potentials, and 4 neurons from the circuit are shown in detail in the Results view (the arrangement of 2 per axis is just to aid comparison).

- After admiring the pretty colours for a few moments, click Pause while you read on.

Each neuron is connected to its neighbours by electrical synapses. For clarity these have been hidden, but they can be revealed by deselecting the Connexions: Hide connexions menu option. BUT, in the default configuration as loaded, all the electrical synapses are blocked by the drug NEM (n-ethylmaleimide, a gap-junction blocker).

Because the electrical synapses are blocked, each neuron free-runs at its own intrinsic rhythm, and due to the random noise, they rapidly desynchronize.

- Click Continue to do just that.

- While the simulation continues to run, uncheck the NEM box in the Experimental Control panel.

This unblocks the electrical synapses, and the neurons rapidly synchronize. As soon as one neuron enters its depolarized phase, it “pulls” the others along after it, and a wave of depolarization sweeps across the whole network. Over time, the pattern of this wave can change, since the membrane noise means that it will not always be the same neuron that takes the lead role. (I was once lucky enough to see synchronized firefly flashing in the North Georgia mountains while on a family holiday. The similarity in visual appearance to the output of this simulation was striking, and the underlying mechanism of nearest-neighbour coupling is probably similar.)

- Check and uncheck the NEM box a few times, and observe synchronization and de-synchronization.

- When you have seen enough, click Stop, and Clear the Results view display.
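The effect of nearest-neighbour coupling on a noisy population can be sketched with phase oscillators. This swaps the Neurosim bursters and their electrical synapses for a much simpler Kuramoto-style model, so it illustrates the principle rather than the simulation itself. The grid size (10 x 10) follows the text; the frequency, noise level and coupling strength are hypothetical. Synchrony is summarized by an order parameter that is close to 1 when all phases agree and close to 0 when they are scattered.

```python
import math
import random

def order_parameter(phases):
    """Length of the mean phase vector: 1 = synchronized, near 0 = scattered."""
    n = len(phases)
    c = sum(math.cos(p) for p in phases) / n
    s = sum(math.sin(p) for p in phases) / n
    return math.hypot(c, s)

def run_grid(coupling, size=10, freq_hz=1.0, noise_sd=0.7,
             t_end=20.0, dt=0.02, seed=1):
    """Noisy phase oscillators on a grid, coupled to their nearest neighbours."""
    rng = random.Random(seed)
    n = size * size
    neighbours = []
    for idx in range(n):
        row, col = divmod(idx, size)
        nb = []
        if row > 0:
            nb.append(idx - size)
        if row < size - 1:
            nb.append(idx + size)
        if col > 0:
            nb.append(idx - 1)
        if col < size - 1:
            nb.append(idx + 1)
        neighbours.append(nb)
    omega = 2 * math.pi * freq_hz
    phases = [0.0] * n                      # start synchronized, as in the text
    for _ in range(int(t_end / dt)):
        updated = []
        for i in range(n):
            pull = sum(math.sin(phases[j] - phases[i]) for j in neighbours[i])
            noise = noise_sd * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            updated.append(phases[i] + dt * (omega + coupling * pull) + noise)
        phases = updated
    return order_parameter(phases)

r_blocked = run_grid(coupling=0.0)   # analogous to NEM checked
r_coupled = run_grid(coupling=5.0)   # analogous to NEM unchecked
print(r_blocked, r_coupled)
```

With the coupling set to zero the noise scatters the phases, while with coupling each oscillator is continually pulled back towards its neighbours and the population stays together.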

Spike vs Time mode

An alternative visualization can be achieved in the Spike vs Time mode.

- Select Spike vs Time in the Display mode group of the Results view.

- Click Start.

- If you want to speed things up, de-select the Neuron: Colours: Colours from voltage menu option (you may now need to set a non-zero Slow down factor).

- As the simulation progresses, check and uncheck the NEM box in the Drugs group of the Setup view to see the network move in and out of synchronization.

Metachronal Rhythm

In the previous simulation, all the oscillators had the same intrinsic frequency. However, this does not have to be the case.

- Load and Start the parameter file Metachronal Rhythm.

We have 5 neurons linearly coupled by electrical synapses into a nearest-neighbour chain, although as before, the synapses are initially blocked by NEM.

Each neuron is an oscillator, and can be thought of as representing the segmental CPG in a chain of ganglia such as might control, for instance, the legs of a centipede. The CPGs are not identical. The intrinsic frequency follows a segmental gradient, with N5 (the most posterior) being the fastest, and each segmental homolog being slower as they ascend rostrally in the chain. With the coupling blocked, each CPG free-runs at its intrinsic frequency.

- Watch the pattern of activation by observing the colour changes of the neurons in the Setup view.

After a short while, the coordination appears chaotic. Each neuron is oscillating at its own frequency, and the peaks drift past each other as the simulation progresses, producing an apparently random pattern of colour changes in the chain of neurons.

- Uncheck the NEM box in the Experimental Control panel.

After a few cycles, the CPGs now become synchronized. However, N5 always leads the rhythm, with the other segments following in sequence. Thus a metachronal rhythm is generated, in which a wave of excitation sweeps rostrally through the chain.

- While the simulation continues to run, select the Neuron: Zap menu option (the simulation then stops), and click on the central neuron N3 (the simulation restarts). This kills that neuron.

With the central oscillator removed from the circuit, the two ends now oscillate independently. The posterior end oscillates at relatively high frequency, driven by N5 as its pacemaker. The anterior end oscillates more slowly, with N2 acting as its pacemaker.

- You can use the Neuron: Un-zap facility to restore N3 to the circuit, and the faster rhythm now resumes throughout.

This very simple model uses just one of the many mechanisms that can produce a metachronal rhythm. However, it can generate testable hypotheses. It predicts that if you remove the central oscillator, perhaps surgically, or perhaps, more reversibly, by pharmacological intervention, the two ends will continue to produce coordinated oscillations, but at different frequencies. If you did that in a real system and found, for instance, that the back end continued to oscillate but the front end shut down, then this means that your system must be using a different mechanism to this model.
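The prediction can be checked in a reduced model. The sketch below again uses abstract phase oscillators in place of the Neurosim neurons, with a hypothetical gradient of intrinsic frequencies (1.0 to 1.4 Hz from N1 to N5) and a hypothetical coupling strength. One difference from the Neurosim simulation should be kept in mind: with this symmetric phase coupling the shared frequency of a locked group is the mean of its members' intrinsic frequencies, rather than being set mainly by the fastest member.

```python
import math

def run_chain(freqs_hz, coupling, alive=None, t_end=20.0, dt=0.001):
    """Chain of phase oscillators with nearest-neighbour coupling.

    Returns (mean frequency of each oscillator over the second half of the
    run, final phases). Zapped oscillators (alive[i] False) are frozen and
    do not influence their neighbours.
    """
    n = len(freqs_hz)
    alive = alive if alive is not None else [True] * n
    phases = [0.0] * n
    steps = int(round(t_end / dt))
    half = steps // 2
    snapshot = list(phases)
    for step in range(steps):
        if step == half:
            snapshot = list(phases)
        updated = list(phases)
        for i in range(n):
            if not alive[i]:
                continue
            pull = 0.0
            for j in (i - 1, i + 1):
                if 0 <= j < n and alive[j]:
                    pull += math.sin(phases[j] - phases[i])
            updated[i] = phases[i] + dt * (2 * math.pi * freqs_hz[i] + coupling * pull)
        phases = updated
    elapsed = (steps - half) * dt
    mean_freq = [(p - s) / (2 * math.pi * elapsed) for p, s in zip(phases, snapshot)]
    return mean_freq, phases

def wrapped(angle):
    """Wrap an angle into the range -pi to +pi."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

freqs = [1.0, 1.1, 1.2, 1.3, 1.4]           # N1 (anterior) to N5 (posterior)
intact_freq, intact_phase = run_chain(freqs, coupling=2 * math.pi)
# Phase lead of each oscillator over its anterior neighbour.
leads = [wrapped(intact_phase[i + 1] - intact_phase[i]) for i in range(4)]

zapped_freq, _ = run_chain(freqs, coupling=2 * math.pi,
                           alive=[True, True, False, True, True])
print(intact_freq, leads, zapped_freq)
```

In the intact chain all five oscillators settle to a common frequency, with each one leading its anterior neighbour, so activity sweeps from N5 towards N1. With N3 removed, the anterior pair and the posterior pair each remain coordinated, but at different frequencies.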

Stochastic Resonance: Noise Matters

Noise, in a signal processing context, refers to random fluctuations in a signal which do not have any information content relevant to the signal itself. Noise is usually thought of as a bad thing because it corrupts the signal - the receiver has no way of knowing which fluctuations in a signal are noise, and which are the information of interest. However, in some contexts, noise can actually be a good thing. In particular, in sensory systems, noise can enhance the sensitivity of the receiver to small signals through a process known as stochastic resonance. (Stochastic resonance theory was originally developed specifically for oscillating signals, but the term is now generally taken to include any situation in which noise enhances the performance of a non-linear signal processing system; McDonnell & Abbott, 2009.)

- Load the parameter file Stochastic Resonance.

- Check the Spike frequency graph box in the Display mode group of the Results view to open the Spike Frequency graph, and move its window to a convenient location. It is dockable, but there is no need to dock it anywhere unless you want to.

- This graph will show the average spike frequency in the two neurons, calculated after each simulation run. At the moment it is empty, because there has not been a run.

- Click Start.

The Setup shows two spiking sensory neurons (N1 and N2), receiving an identical stimulus input. The two sensory neurons are themselves identical, except that N2 has added noise. (The noise is generated by random current fluctuations following an Ornstein-Uhlenbeck process. This is a good approximation to noise generated by the random opening and closing of ion channels; Linaro et al., 2011.) The default stimulus amplitude is quite small (0.08). This generates a voltage response in N1 which is definitely below threshold, so N1 does not spike. It is also very unlikely that N2 spikes (unless the noise takes an exceptionally positive random value), so the stimulus is undetected by either sensory neuron.

- Click the up arrow on the stimulus amplitude in the Experimental Control panel to increase it slightly.

The Options: Run on change menu toggle has been pre-selected, so a new simulation runs when you change the stimulus amplitude. However, the stimulus is still quite small and it is unlikely to generate spikes in the sensory neurons (it certainly won’t in N1, it probably won’t in N2). Note that the purple bars in the frequency graph are both at or close to zero.

- Repeatedly click the up arrow on the stimulus amplitude until it reaches a value of 0.15, observing the traces in the Results view and the bars in the frequency graph, at each change.

As the stimulus amplitude increases, you should start to see occasional spikes in N2, which is the sensory neuron with added noise. The frequency of these spikes increases as the stimulus strength increases (note the N2 purple bar rises higher in the frequency graph). However, N1 remains silent even though it receives the same input.

Remember that N1 and N2 are identical apart from the noise, and so have exactly the same spike threshold. However, as the membrane potential in N2 gets close to threshold, the added noise occasionally lifts it above threshold, hence the spikes. N1 remains silent because without noise its threshold is never reached for these stimuli.

- Click the up arrow on the stimulus amplitude once, to take it to a value of 0.16.

The stimulus now finally crosses threshold in N1, which consequently generates spikes. However, the spike frequency in N1 immediately jumps to about 30 Hz – there is no graded low-frequency response like there was in N2.

- Click the up arrow on the stimulus amplitude twice more until it reaches a value of 0.18, observing the traces in the Results view and the bars in the frequency graph, at each change.

Both neurons now respond with approximately the same increasing spike frequency as the stimulus strength increases. Thus the noise has not reduced the coding capability of N2 relative to N1 in terms of the average spike frequency above N1 threshold, although the fine-timing capability is reduced due to the increased uncertainty in the exact moment at which threshold is crossed.

Take-home message: The noise increases the sensitivity of N2, so that it can respond to weaker stimuli than N1, even though they both have the same absolute spike threshold. Furthermore, the noise increases the dynamic range of N2, so that it is able to code the weaker stimuli with lower frequency spikes.

A key requirement for the occurrence of stochastic resonance is that the detecting system should be non-linear. The sensory neurons are highly non-linear because they generate spikes. When the input signal is below threshold they have zero output, when it is above threshold they generate spikes in which the input amplitude is coded by the output frequency. It is this non-linearity that causes noise to benefit signal detection. (If the neurons were non-spiking and their output was mediated by non-spiking synapses, then the noise would simply contaminate the output and be of no benefit whatsoever.) Of course, even in a non-linear system there is a limit to the benefit – if there is too much noise the signal will just become lost in the noise. In fact, for time-varying signals there is usually a single optimum noise level, which is why “resonance” is part of the name originally coined for the process.
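The role of the threshold non-linearity can be illustrated with a leaky integrate-and-fire neuron driven by a constant stimulus plus Ornstein-Uhlenbeck noise. This is a deliberately simpler neuron than the spiking model in the simulation, and every parameter here (dimensionless threshold of 1, time constants, noise amplitude) is hypothetical. The stimulus values are in the same dimensionless units as the threshold, not the amplitudes used in Neurosim.

```python
import math
import random

def count_spikes(stimulus, noise_sd, t_end_s=2.0, dt_s=1e-4,
                 tau_m_s=0.01, tau_noise_s=0.005, threshold=1.0, seed=0):
    """Spike count of a leaky integrate-and-fire neuron with OU noise input."""
    rng = random.Random(seed)
    # Exact one-step update of an Ornstein-Uhlenbeck process with
    # stationary standard deviation noise_sd.
    decay = math.exp(-dt_s / tau_noise_s)
    kick = noise_sd * math.sqrt(1.0 - decay * decay)
    v, noise, spikes = 0.0, 0.0, 0
    for _ in range(int(round(t_end_s / dt_s))):
        noise = noise * decay + kick * rng.gauss(0.0, 1.0)
        v += (dt_s / tau_m_s) * (-v + stimulus + noise)
        if v >= threshold:          # the non-linearity: spike and reset
            spikes += 1
            v = 0.0
    return spikes

quiet_sub = count_spikes(stimulus=0.9, noise_sd=0.0)    # like N1, sub-threshold
noisy_sub = count_spikes(stimulus=0.9, noise_sd=0.3)    # like N2, same stimulus
quiet_supra = count_spikes(stimulus=1.1, noise_sd=0.0)  # above threshold
print(quiet_sub, noisy_sub, quiet_supra)
```

Without noise the sub-threshold stimulus produces no output at all, whereas the same stimulus with added noise produces spikes, because the fluctuations occasionally carry the membrane potential across the threshold.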

Finally, it is worth pointing out that some level of noise is absolutely inevitable in any biological system, so all spiking sensory neurons will benefit from stochastic resonance to some extent. In man-made systems, engineers sometimes add noise specifically to optimize stochastic resonance (this is the principle that underlies dithering in commercial analogue-to-digital conversion processes), but I am not aware of any evidence for specific biological adaptations tuning the level of intrinsic noise in sensory neurons to enhance their detection capability. It has, however, been shown that adding noise to an external stimulus can enhance its detection through stochastic resonance (e.g. Levin & Miller, 1996).

Lateral Inhibition

From an evolutionary perspective, paying attention to changes in the environment is probably more important than focussing attention on things that just carry on without change. The change can be something in time – the sudden noise that alerts you to the presence of danger, or in space – the visual line that demarcates the edge of a narrow path with a steep drop on one side. So it is not surprising that the nervous system is especially tuned to detect such changes.

One well-known mechanism for emphasising edges in a spatial field is lateral inhibition. This occurs in spatially-mapped senses such as the visual system, and it refers to the ability of a stimulated neuron to reduce the activity of neurons on either side of it.

- Load the parameter file Edge detector. (If you are using v5-2 the file Lateral inhibition 1D shows a similar effect.)

This is a concept simulation loosely based on the vertebrate retina. The top row is an array of spatially-mapped non-spiking receptors (or bipolar on-cells in the retina). Each neuron in the row inhibits those on either side of it through a non-spiking chemical synapse, thus mediating lateral inhibition. (The connections have been hidden for clarity, but can be revealed by deselecting the Connexions: Hide all connexions menu option.) The middle part of the array (N10 - N20) will receive a brief stimulus delivered through the square boxes when you run the experiment.

The lower row is an array of spiking interneurons (perhaps ganglion cells in the retina). Each interneuron is activated by its partner receptor in the row above through a non-spiking excitatory chemical synapse. The interneurons have some added membrane noise to enhance their sensitivity through stochastic resonance.

- Click Start.

The Results view shows activity in the receptor layer, but the Display mode has been set to Voltage vs Neuron. This means that the X-axis represents the individual neurons in the receptor array (N1 - N30), and the Y axis represents the membrane potential of each of those neurons. The whole display evolves over time, before stabilizing, at which point dots are drawn to show the potential of each neuron.

The response shows the clear edge-enhancement produced by lateral inhibition. If you hover over the two "cat's ear" peaks, the status bar shows that they are generated by N10 and N20, which are the two receptors just on the inside edge of the stimulus, while the adjacent troughs are generated by N9 and N21, which are just on the outside edge. The network thus differentially amplifies the response at the transition from unstimulated to stimulated receptors, compared to the stable conditions within the “body” of the stimulus, although there is still a clear difference in activity level in that region too.

Task: Think through how lateral inhibition produces the edge-enhancement that the simulation demonstrates. Explain it to a friend!

- Check the picrotoxin box in the Experimental control panel to block the lateral inhibition.

- Click Start.

- Use the Results Highlight sweep facility to look at the two sweeps in turn.

With the picrotoxin applied, the receptor array response is simply proportional to the strength of the stimulus – there is no edge-enhancement.

It is worth noting that lateral inhibition suppresses the overall activity level compared to what it is without inhibition, and also reduces the difference in activity between the unstimulated response and the response in the “body” of the stimulus. However, the enhancement in the difference seen at the edge seems to be a worthwhile trade-off, given that lateral inhibition has been found in many sensory processing systems in many animals.
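The receptor-layer behaviour can be reproduced qualitatively with a very simple linear model. The following Python sketch is an assumption-laden caricature, not the Neurosim simulation: each receptor's steady-state level is taken to be its stimulus minus a fixed fraction (`weight`, an arbitrary value) of the levels of its two immediate neighbours, and levels are in arbitrary units relative to rest (so negative values mean "below resting level").

```python
def receptor_steady_state(stimulus, weight, n_iterations=200):
    """Relax v[i] = stimulus[i] - weight * (v[i-1] + v[i+1]) to steady state.

    Neurons at the ends of the array simply have one neighbour missing.
    The iteration converges when weight is below 0.5.
    """
    n = len(stimulus)
    v = list(stimulus)
    for _ in range(n_iterations):
        new_v = []
        for i in range(n):
            left = v[i - 1] if i > 0 else 0.0
            right = v[i + 1] if i < n - 1 else 0.0
            new_v.append(stimulus[i] - weight * (left + right))
        v = new_v
    return v


# 30 receptors; N10 to N20 are stimulated (list index = neuron number - 1).
stimulus = [1.0 if 10 <= n <= 20 else 0.0 for n in range(1, 31)]

with_inhibition = receptor_steady_state(stimulus, weight=0.2)
blocked = receptor_steady_state(stimulus, weight=0.0)  # "picrotoxin"

for n in (5, 9, 10, 15, 20, 21):
    print(f"N{n}: with inhibition {with_inhibition[n - 1]:+.3f}, "
          f"blocked {blocked[n - 1]:+.3f}")
```

In this sketch the receptors just inside the edge (N10, N20) settle at a higher level than those in the body of the stimulus (N15) because they receive inhibition from only one strongly active neighbour, while those just outside the edge (N9, N21) are pushed below the unstimulated level. Setting `weight` to zero removes both effects and also raises the level in the body of the stimulus.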

What happens in the spiking interneuron layer?

- Uncheck the picrotoxin box to restore lateral inhibition.

- Clear the Results.

- Select Spike vs Time in the Display mode group.

- Click Start.

The Results view now shows a raster plot of the spikes in the interneuron layer (N31 - N60), with each dot representing a spike.

It is very clear that N40 and N50 have a strongly enhanced spike rate during the stimulus. These are the interneurons that receive their input from the receptors just on the inside edge of the stimulus. The interneurons within the "body" of the stimulus spike occasionally, but at a much lower rate than those just inside the edge.

- Repeat the picrotoxin experiment to block lateral inhibition, and note that all interneurons within the stimulated region now have a high uniform spike rate.

To see the membrane potential responses of individual neurons:

- Uncheck the picrotoxin box to restore lateral inhibition.

- Clear the Results.

- Select Neuron vs Time in the Display mode group.

- Click Start.

The Results view shows the activity of a receptor and its paired interneuron outside of the stimulus (N5, N35; top two traces), just on the inside edge of the stimulus (N10, N40; middle two traces) and within the body of the stimulus (N15, N45; bottom two traces). Note that the spike responses will be variable due to the membrane noise, particularly in N45 which is close to threshold during the stimulus.

Take-home message: Lateral inhibition increases the perceived contrast at the edge of a spatially-mapped stimulus.

Pre-Synaptic Inhibition

One advantage of chemical synapses for communication between neurons is that they can be highly plastic - the strength of the connection can often be modulated, both on a long-term basis (e.g. long-term potentiation; LTP), and also on a moment-by-moment basis. One of the key mechanisms underlying the latter is pre-synaptic inhibition.

Standard post-synaptic inhibition is a familiar phenomenon - IPSPs impinge on a neuron and counteract the effect of any EPSPs occurring in the same neuron. Pre-synaptic inhibition is different - it occurs when an inhibitory neuron targets the release terminals of the excitatory pre-synaptic neurons that are delivering the EPSPs to the post-synaptic neuron, and prevents or reduces the release of transmitter. Pre-synaptic inhibition thus directly reduces the size of the message impinging on the post-synaptic neuron, rather than merely counteracting its effects after it has already arrived.

Pre-synaptic inhibition is well established as a key mechanism for gating the flow of sensory information into the CNS. It would be quite difficult to stop a sensory neuron from actually responding to a peripheral sensory stimulus - this might require sending an inhibitory axon all the way to the periphery and inhibiting the sensory neuron at its transduction site. Post-synaptic inhibition could prevent a neuron from responding to sensory input, but it would also prevent it responding to any other input arriving at the same time. If an animal needs to block sensory input from a specific source but leave it responsive to other inputs, the answer is to allow the sensory neuron to respond as normal, but to stop it from making output in the CNS, and thus prevent it from having any effect. (This is, of course, a totally post hoc argument - evolution often comes up with solutions to problems that do not seem to be the most efficient way of doing things.)

Primary Afferent Depolarization (PAD)

Pre-synaptic inhibition of afferent neurons in the vertebrate spinal cord is mediated by the release of GABA from inhibitory interneurons, which activates GABA-A receptors in the afferent terminals. This leads to an increase in chloride conductance in the terminals, and a consequent IPSP. However, there is an unusually high concentration of Cl- within the terminal itself (due to the sodium-potassium-chloride co-transporter NKCC1), and so the equilibrium potential for Cl- is depolarized relative to resting potential. The IPSP is therefore depolarizing, as discussed previously in the Synapse part of the tutorial. This is called primary afferent depolarization (PAD) (Engelman & MacDermott, 2004).
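The link between internal chloride concentration and the polarity of the IPSP follows from the Nernst equation. The Python sketch below evaluates it for two internal concentrations. The concentrations and the temperature are purely illustrative assumptions chosen to show the direction of the effect; they are not measured values for afferent terminals and are not parameters of the Neurosim model.

```python
import math


def chloride_equilibrium_mV(cl_out_mM, cl_in_mM, temperature_C=37.0):
    """Nernst equilibrium potential for Cl- in millivolts.

    E = (R*T / (z*F)) * ln([Cl]out / [Cl]in), with valency z = -1.
    """
    gas_constant = 8.314      # J / (mol K)
    faraday = 96485.0         # C / mol
    valency = -1              # chloride carries a single negative charge
    temperature_K = temperature_C + 273.15
    volts = (gas_constant * temperature_K / (valency * faraday)) \
        * math.log(cl_out_mM / cl_in_mM)
    return 1000.0 * volts


# Hypothetical concentrations (mM): same external Cl-, two internal levels.
low_internal = chloride_equilibrium_mV(cl_out_mM=120.0, cl_in_mM=6.0)
high_internal = chloride_equilibrium_mV(cl_out_mM=120.0, cl_in_mM=12.0)

print(f"E_Cl with low internal Cl-:  {low_internal:.1f} mV")
print(f"E_Cl with high internal Cl-: {high_internal:.1f} mV")
```

Raising the internal concentration makes the equilibrium potential less negative. If it ends up above the resting potential, opening chloride channels depolarizes the terminal, even though the synapse is inhibitory in its effect on transmitter release.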

Depolarizing inhibition is highly counter-intuitive at first sight, and yet it is ubiquitous in sensory gating, and quite common elsewhere too. So how does it work, and what are its advantages?

- Load and Start the parameter file Pre-Synaptic Inhibition (v5-2).

The Setup view shows a sensory (afferent) axon snaking its way into the spinal cord. The axon is simulated as a compartmental model, with each segment implementing HH-type spikes and connected to its neighbours by electrical synapses (for simplicity, these electrical synapses are hidden in the Setup view). A spike is initiated in the peripheral segment (N1, top-left in the Setup view, red) by sensory stimulus 1. The peripheral spike is shown in the Results view top axis as the red trace. The spike propagates along the axon into the CNS, where after a short delay it arrives at the terminal output segment (N21 in the Setup view, green; Results view top axis green trace).

To make this clear:

- Click Clear in the Results view.

- Select the Neuron: Colours: Colour from Voltage menu command.

- Click Start.

You should see the spike propagating in the axon as a series of colour changes.

- Go back to the normal display by reversing the steps above.

Within the CNS the sensory neuron makes an excitatory synaptic connection to a post-synaptic neuron (N22, blue; this could be a motorneuron or an interneuron). The EPSP is visible in the Results view 3rd axis (blue trace). (To make the EPSP sensitive to the shape of the pre-synaptic spike, it is generated by a non-spiking synapse with a high threshold for transmitter release.) For clarity, N22 has been made a non-spiking neuron, but the EPSP is quite large and could well elicit a spike if the neuron were capable of generating one.

The last two segments of the afferent axon receive pre-synaptic inhibition from an interneuron (N23, orange), but this is not activated in the default situation (no spike is visible in the lower axes of the Results view), so there is no inhibition. (Two segments are inhibited purely for simulation convenience - it was easier to demonstrate the phenomenon when two rather than one segment received the inhibition. I expect that with suitable adjustment of parameters a similar effect could be achieved by inhibiting just one segment, but since the segment boundaries are purely an artefact of the compartmental model methodology, this did not seem worth the effort.)

- Set the amplitude of stimulus 2 to 8 nA in the Experimental Control panel to the left of the Setup view (you can do this by just clicking the up-arrow of the spin button).

- Run on change is pre-selected in the Options menu, so a new simulation runs, with traces superimposed in the Results view.

Several changes are visible:

- There is a spike in the pre-synaptic inhibitor neuron (orange trace: this is an integrate-and-fire neuron, so the spike is just shown as a vertical line), which is timed to occur just before the afferent spike arrives in the terminal segment of the afferent axon. (This neuron uses simplified integrate-and-fire spikes rather than the full HH formalism, since its only job is to generate the IPSPs in the afferent terminal.)

- A depolarizing potential is visible in the membrane potential of the terminal segment (green trace) which starts before the spike arrives in that segment. This is the PAD generated by the pre-synaptic inhibitor.

- The afferent spike in the terminal segment is reduced in amplitude and duration compared to the spike without PAD.

- The EPSP in the receiving neuron (blue trace) is considerably reduced in amplitude - this is the result of the PAD-induced pre-synaptic inhibition.

- Use the Highlight sweep facility in the Results view Trigger mode group to switch between sweeps 1 and 2, to make sure that you understand the difference between the two.

Inhibition Mechanism

Why does the PAD generate inhibition? This is still a matter of some debate, but there are generally thought to be 2 effects at play.

- Shunting: The increased chloride conductance in the afferent terminal will act as a current shunt, which increases the leak of the spike-induced depolarizing current, and hence reduces spike amplitude. However, this would also occur with a hyperpolarizing IPSP, so it does not explain why depolarization is so common.

- Sodium inactivation / potassium activation: The depolarization starts to inactivate voltage-dependent sodium channels and activate voltage-dependent potassium channels just before the afferent spike invades the terminal region. This will reduce the peak spike amplitude and duration.

Either mechanism would reduce the activation of voltage-dependent calcium channels in the pre-synaptic terminal and hence the inflow of calcium, thus reducing transmitter release.
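The shunting effect, and the fact that it does not depend on the polarity of the IPSP, can be illustrated with a steady-state calculation for a single passive compartment. This Python sketch is a deliberate simplification: a real spike is a brief, active event rather than a steady current, and the leak conductance, chloride conductance and injected current used here are arbitrary assumed values, not Neurosim parameters. Only the -70 mV resting potential and the two chloride equilibrium potentials (-61 mV and -70 mV) follow the values used in this tutorial.

```python
def steady_state_voltage(i_inj_pA, g_leak_nS=10.0, e_leak_mV=-70.0,
                         g_cl_nS=0.0, e_cl_mV=-70.0):
    """Steady-state potential (mV) of a passive compartment.

    V = (g_leak*E_leak + g_cl*E_cl + I) / (g_leak + g_cl)
    Units are consistent: nS * mV = pA, and pA / nS = mV.
    """
    total_conductance = g_leak_nS + g_cl_nS
    return (g_leak_nS * e_leak_mV + g_cl_nS * e_cl_mV + i_inj_pA) \
        / total_conductance


depolarizing_current_pA = 100.0  # stands in for the spike-induced current

conditions = {
    "no inhibition": dict(g_cl_nS=0.0),
    "Cl- open, E_Cl = -61 mV": dict(g_cl_nS=20.0, e_cl_mV=-61.0),
    "Cl- open, E_Cl = -70 mV": dict(g_cl_nS=20.0, e_cl_mV=-70.0),
}

responses = {}
for label, kwargs in conditions.items():
    baseline = steady_state_voltage(0.0, **kwargs)
    driven = steady_state_voltage(depolarizing_current_pA, **kwargs)
    responses[label] = (baseline, driven - baseline)
    print(f"{label}: baseline {baseline:.1f} mV, "
          f"response to current {driven - baseline:.2f} mV")
```

In both cases with the chloride conductance open, the response to the same depolarizing current is reduced by the same factor, which is the shunt. Only the case with the depolarized equilibrium potential shifts the baseline away from rest, and it is that shift (the PAD) that would additionally affect the voltage-dependent sodium and potassium channels.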

At this stage you should have 2 sweeps visible on the screen, the first showing the blue EPSP without pre-synaptic inhibition, the second showing it with the inhibition. (If you have cleared the screen, repeat the experiments above to get back to this stage.)

- Select the Synapses: Spiking Chemical menu command to open the Spiking Chemical Synapse Type dialog. This is non-modal, so you can keep it open while running simulations.

If possible, move the dialog box so that it does not obscure the main window.

- Select synapse type b: pre-synaptic inhibition in the Type list.

- Note that the equilibrium potential is -61 mV, which is depolarized relative to the resting membrane potential of the post-synaptic neuron (the afferent neuron), which is -70 mV, hence the depolarizing nature of the IPSP.

- Change the equilibrium potential to -70 mV.

- This is the same value as the resting potential, so it should generate a flat (silent) IPSP, with no PAD, and consequently no pre-inactivation of the terminal sodium channels. Any inhibition would have to be a consequence of shunting.